Maximum entropy principle, Copy lemma and information inequalities

The Maximum entropy principle (MEP or MAXE) is a principle in statistical mechanics. It is applied in situations where partial information about a complex system (i.e., a joint distribution of some random variables) is available, for instance some of its marginals can be measured. Among all distributions which are compatible with these constraints, MAXE suggests to select the one with maximal (Gibbs) entropy. This is the same as distributing probability mass as equally as the constraints allow.

This article is about its application to information theory where some marginal entropies of a system of random variables are prescribed. Crucially, it turns out that the maximum-entropy distribution under such assumptions satisfies certain “special position relations”. MAXE is used as a tool to tweak a given distribution into satisfying more independence relations at the expense of, naturally, changing some of its marginal entropies. This creates a trade-off between imposing independence relations and preserving marginal entropies which can sometimes be exploited. An example of this is given at the end.

This result is not new. In fact, it is a widely used technique and usually appears under the name Copy lemma, although the formulation I want to present here appears to be slightly more general. All of this is based on conversations I had at WUPES ’25 with Laszlo Csirmaz and Milan Studený as well as when I visited Andrei Romashchenko.

Basics of the almost-entropic region

We will work in the almost-entropic region. To get there, fix a finite ground set and consider discrete random vectors , where the can have any finite number of states. This vector determines a set function which sends to the Shannon entropy of the marginal random vector . This function is the entropy profile of . The collection of all entropy profiles of -variate discrete distributions is the entropy region and its closure in the euclidean topology is a convex cone called the almost-entropic region.

The elements of the dual cone of are known as information inequalities. They are essential in proving bounds for optimization problems in information theory and thus certify the optimality of a given communication protocol.

The most fundamental information inequalities come from the non-negativity of conditional mutual information. This just means that entropy profiles are monotone with respect to set inclusion and submodular over the boolean lattice. Additionally . Linear combinations of these basic inequalities are called Shannon-type inequalities. We will use the following abbreviations

- For we use and interchangeably.

- For we write .

- .

- .

The basic inequalities are the non-negativities of all possible and . We will also treat the symbols and as functionals on the space .

Maximum entropy principle

We will need a basic and well-known construction:

Conditional product lemma. Let be a finite set, and pairwise disjoint sets . There exists such that for all , all and all , and also .

Proof. Let be a system of random variables with and let denote its probability density function. Denote the state space of any marginal by and set . We define a new density by

where . Let be the random variable defined by and . It is easy to see that the maringal distributions and coincide with the respective marginals of , so in particular takes the same values as on all of their subsets. Moreover, each conditional density , for , factors into and which proves the slightly stronger conditional independence . Using the semigraphoid axioms (which follow from the submodular inequalities) yields which is equivalent to the required equation on conditional entropies.

First note that whenever is a strict subset of , we may add the difference to either or and obtain a stronger result. Secondly, the result also holds for almost-entropic points by continuity.

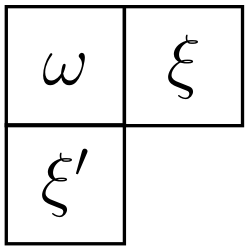

With a fixed finite set, consider a simplicial complex over . Pairwise disjoint are dependent in if there exists which intersects two distinct ’s; otherwise they are independent.

Maximum entropy principle. Let and be given with . There exists such that for all and for all independent and .

Proof. Let be a maximizer of subject to the constraints for . This is a linear optimization problem over the closed convex cone . By submodularity, we have the upper bound , where are the facets of , since . Hence, the domain is compact and such a maximizer exists.

Take any collection of sets as in the claim. If the claim is violated, then the basic inequalities imply . By dividing the ’s into two parts, we may assume that and call and . Thus . The conditional product of and over yields with

At the same time, preserves the value of on all subsets of and of . Consider any . If it were not a subset of either of the two, then it must contain an element as well as an element . But this directly contradicts the independence of and in which follows from the independence of the initial -tuple . Hence is feasible in the optimization problem we set up and contradicts the maximality of .

This proof is non-constructive but it yields multiple instances of the conditional product independence statement simultaneously. An important (sort of) special case is the Copy lemma which is really just a reformulation of the conditional product:

Copy lemma. Let be two random variables. This system can be extended to a triple such that has the same distribution as and .

The picture to have in mind is that a copy of the variables is created and then this copy is amalgamated with the original over in such a way that and are “orthogonal” (i.e., independent) given the they share.

It is worth noting that the conditional product does not increase the state space of the random variables. We have no such control in the maximum entropy principle.

Application: strengthening information inequalities

I learned the following example from Laszlo Csirmaz. Let denote the Ingleton functional. It may be checked by linear programming that the following is a valid Shannon inequality for 5 random variables:

This can be checked using oXitip using the one-line query "I(C;D) <= I(C;D|A)+I(C;D|B)+I(A;B)+I(B;Z|C)+I(C;Z|B)+I(B;C|Z) + 3 I(Z;A,D|B,C)".

It appears possible that the left-hand side, let’s call it , could become negative, but this actually cannot happen due to MAXE! Suppose that there is an almost-entropic point such that . Let be the simplicial complex generated by the marginals appearing in and notice that is independent of in , i.e., neither nor appear in any entropy evaluation in . We can apply MAXE to obtain an almost-entropic point with and . But this point will contradict the Shannon inequality above which is absurd.

This reasoning proves the stronger inequality

which simplifies to the well-known Zhang–Yeung inequality for . This is very close to how Zhang and Yeung originally proved their inequality which was the first example of a non-Shannon information inequality.

For the purposes of proving unconditional information inequalities, the distinction between entropic and almost-entropic points is immaterial. Thus, MAXE appears to be more general than the Copy lemma. As far as I am aware, there is no known instance to date of a valid information inequality which can be proved with MAXE but not with iterated applications of the Copy lemma. In fact, even independently of MAXE, I do not know of any valid information inequality which cannot be proved by iterated use of the Copy lemma.

Addendum (16 Sep 2025): Laszlo Csirmaz has recently published a manuscript on Exploring the entropic region which discusses this topic, among others, in more detail.